Teaching microrobots to dream

‘Our goal is to create microrobots that can intelligently navigate the human body, adapting to its complexity in real time,’ says Daniel Ahmed, outlining the objective of the project, which is supported by the European Research Council (ERC) through the Starting Grant SONOBOTS. Microrobots hold immense promise for the future of medicine. They could one day deliver drugs to hard-to-reach tumors, clear blockages in blood vessels, or perform microsurgeries, all without invasive procedures. However, controlling microrobots inside the human body presents significant challenges. At such small scales, traditional sensing and navigation technologies like GPS or LiDAR are impractical. Instead, microrobots must rely on indirect actuation methods such as magnetic fields, light, or ultrasound.

Among these, ultrasound offers a promising non-invasive approach, capable of deep tissue penetration and tunable propulsion. Yet steering microrobots with ultrasound is exceptionally complex, even more so in a data-scarce, high-dimensional, and dynamic environment like the body, where real-time control must account for fluid flow, tissue interactions, and the microrobots’ variable responses to acoustic signals.

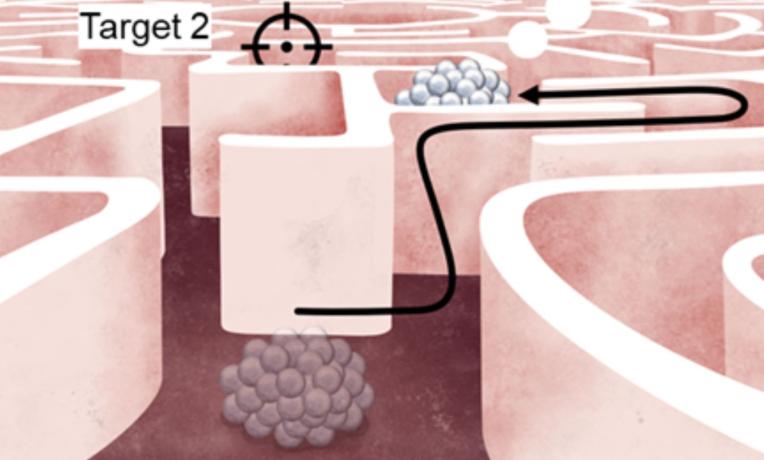

To overcome these challenges, Ahmed’s team developed a new method that allows microrobots to ‘dream’ in simulated environments. ‘We created virtual worlds that mimic the physics of real-world conditions, enabling the microrobots to learn how to move, avoid obstacles, and adapt to new environments,’ explains Ahmed. ‘They later apply these skills in real biomedical settings.’

At the core of the research is model-based reinforcement learning (RL), a form of artificial intelligence that allows machines to learn optimal behaviors through trial and error. Unlike traditional RL, which demands vast datasets and long training times, this approach is sample-efficient and tailored to the constraints of microrobotic systems. The microrobots learn from recurrent imagined environments, which are essentially simulations that mimic the physics of real-world conditions.
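The idea of learning from imagined experience can be illustrated with a toy example. The sketch below is not the team's method: it assumes a hypothetical six-position straight channel, a simple lookup-table model instead of a recurrent learned one, and made-up names such as `real_step` and `train_in_imagination`. It only shows the general pattern of model-based RL, in which a small budget of real interactions is used to build a model, and the control policy is then trained on transitions replayed from that model.

```python
import random

N_STATES = 6          # hypothetical positions 0..5 along a straight channel
ACTIONS = (0, 1)      # 0 = push left, 1 = push right
GOAL = N_STATES - 1   # the target sits at the right end


def real_step(state, action):
    """Stand-in for a physical experiment: returns (reward, next_state, done)."""
    nxt = max(0, state - 1) if action == 0 else state + 1
    done = nxt == GOAL
    return (1.0 if done else 0.0), nxt, done


def collect_model(n_real_steps, rng):
    """Record what happened during a small budget of random real interactions."""
    model, state = {}, 0
    for _ in range(n_real_steps):
        action = rng.choice(ACTIONS)
        reward, nxt, done = real_step(state, action)
        model[(state, action)] = (reward, nxt, done)
        state = 0 if done else nxt  # restart at the entrance after reaching the target
    return model


def train_in_imagination(model, n_updates, rng, alpha=0.5, gamma=0.9):
    """Q-learning on transitions replayed from the model; no real steps are used."""
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    seen = list(model)
    for _ in range(n_updates):
        state, action = rng.choice(seen)
        reward, nxt, done = model[(state, action)]
        target = reward if done else reward + gamma * max(q[nxt])
        q[state][action] += alpha * (target - q[state][action])
    return q


def greedy_policy(q):
    """Best-valued action for every non-target position."""
    return [max(ACTIONS, key=lambda a: q[s][a]) for s in range(GOAL)]


rng = random.Random(0)
model = collect_model(n_real_steps=300, rng=rng)
q_values = train_in_imagination(model, n_updates=5000, rng=rng)
policy = greedy_policy(q_values)
print(policy)
```

In this toy setting, 300 real steps feed 5,000 imagined updates, which is the sense in which such methods are called sample-efficient. Provided the random exploration reached the target at least once, the resulting policy should push right from every position.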

In experimental trials, microrobots pre-trained in simulation achieved a 90% success rate in navigating complex microfluidic channels after one hour of fine-tuning. Even in unfamiliar environments, they initially succeeded in 50% of tasks, improving to over 90% after 30 minutes of additional training. These capabilities were further validated in real-time experiments involving static and flowing conditions in vascular-like networks.

‘This shows that ultrasound-driven microrobots can learn to adapt in real time,’ says Mahmoud Medany, co-lead author of the study. ‘It’s a step toward enabling autonomous navigation in living systems.’

By combining ultrasound propulsion with AI-driven control, Ahmed’s team is laying the foundation for a new generation of intelligent, non-invasive medical tools.

The study was published in Nature Machine Intelligence.